A few months back I wrote a blog post about how to use WithShouldRetry with the Microsoft Graph GraphServiceClient. That was a valid way to use the retry functionality in v4 of the SDK.

Well, Microsoft has since updated their SDK to version 5, which uses the Kiota generator. If you want to know how to upgrade from v4 to v5, you can read all about it in the upgrade v4 to v5 documentation.

In v5 there are no fluent helpers such as WithMaxRetry or WithShouldRetry. I couldn’t find any documentation about it, so I asked on Stack Overflow. Luckily I was not the only one with this question. With some help, I found a way to get the same control over the retry functionality.

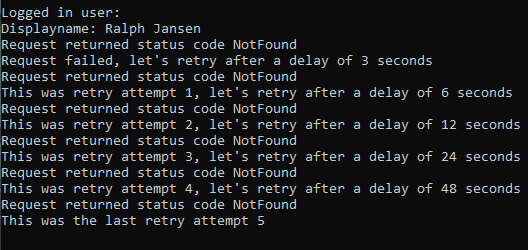

The simple sample below tries to get a random (non-existent) user and retries when the user can’t be found. It won’t retry if the call returns Unauthorized.

```csharp
// Required namespaces
using System.Net;
using System.Net.Http.Headers;
using Microsoft.Graph.Models;
using Microsoft.Kiota.Abstractions;
using Microsoft.Kiota.Http.HttpClientLibrary.Middleware.Options;

// graphClient is an already configured GraphServiceClient.
// Use a random Guid for a user that doesn't exist, to force a retry.
string userId = Guid.NewGuid().ToString();

const int MaxRetry = 5; // Total number of calls is MaxRetry + 1 (the original call plus the retries)

RetryHandlerOption retryHandlerOption = new RetryHandlerOption
{
    MaxRetry = MaxRetry,
    ShouldRetry = (delay, attempt, httpResponse) =>
    {
        Console.WriteLine($"Request returned status code {httpResponse.StatusCode}");

        // Add more status codes here or change the if statement...
        if (httpResponse.StatusCode == HttpStatusCode.Unauthorized)
            return false;

        double delayInSeconds = CalculateDelay(httpResponse, attempt, delay);

        if (attempt == 0)
        {
            Console.WriteLine($"Request failed, let's retry after a delay of {delayInSeconds} seconds");
        }
        else if (attempt == MaxRetry)
        {
            // Stop after the last allowed retry
            Console.WriteLine($"This was the last retry attempt {attempt}");
            return false;
        }
        else
        {
            Console.WriteLine($"This was retry attempt {attempt}, let's retry after a delay of {delayInSeconds} seconds");
        }

        return true;
    }
};

var requestOptions = new List<IRequestOption>
{
    retryHandlerOption,
};

// Pass the retry options along with this single request
User? user = await graphClient
    .Users[userId]
    .GetAsync(requestConfiguration => requestConfiguration.Options = requestOptions);

/// <summary>
/// This is reverse engineered from:
/// https://github.com/microsoftgraph/msgraph-sdk-dotnet-core/blob/dev2/src/Microsoft.Graph.Core/Requests/Middleware/RetryHandler.cs#L164
/// </summary>
/// <param name="response">The response of the failed request.</param>
/// <param name="retryCount">The retry attempt (0 for the original call).</param>
/// <param name="delay">The base delay in seconds (RetryHandlerOption.Delay).</param>
/// <returns>The delay in seconds before the next retry.</returns>
private static double CalculateDelay(HttpResponseMessage response, int retryCount, int delay)
{
    const string RetryAfter = "Retry-After";
    const int MaxDelay = 180; // From github code https://github.com/microsoftgraph/msgraph-sdk-dotnet-core/blob/2e43863e349b4b3ebe2e166c26e3afcc4a974365/src/Microsoft.Graph.Core/Requests/Middleware/Options/RetryHandlerOption.cs#L18

    HttpHeaders headers = response.Headers;
    double delayInSeconds = delay;

    if (headers.TryGetValues(RetryAfter, out var values))
    {
        // Use the Retry-After header (in seconds) if the service sent one
        var retryAfter = values.First();
        if (int.TryParse(retryAfter, out var delaySeconds))
        {
            delayInSeconds = delaySeconds;
        }
    }
    else
    {
        // Otherwise use exponential backoff: delay * 2^retryCount
        var mPow = Math.Pow(2, retryCount);
        delayInSeconds = mPow * delay;
    }

    delayInSeconds = Math.Min(delayInSeconds, MaxDelay);

    return delayInSeconds;
}
```
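If you want to see the numbers this retry policy produces without calling Graph at all, here is a small dry run. It is a sketch under a few assumptions: the helper names (Decide, ComputeDelay, Schedule, CountCalls) are hypothetical, the base delay of 3 seconds is an assumed value for RetryHandlerOption.Delay, and it assumes the handler calls ShouldRetry with attempt 0 after the original call and with attempt N after the Nth retry, as the comments in the sample imply. It reuses the sample's rules: stop on Unauthorized, stop at MaxRetry, and back off by delay * 2^attempt capped at 180 seconds unless an integer Retry-After header is present.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;
using System.Net.Http;

// Dry run of the sample's retry policy; no request is sent to Microsoft Graph.
// Assumption: ShouldRetry is called with attempt 0 after the original call and
// with attempt N after the Nth retry, as the sample's comments imply.
const int MaxRetry = 5;
const int BaseDelay = 3;   // assumed value for RetryHandlerOption.Delay (seconds)
const int MaxDelay = 180;  // same cap as the sample's CalculateDelay

var delays = Schedule(MaxRetry, BaseDelay, MaxDelay);
Console.WriteLine($"Calls: {CountCalls(HttpStatusCode.NotFound, MaxRetry)}"); // Calls: 6
Console.WriteLine($"Delays: {string.Join(", ", delays)}");                    // Delays: 3, 6, 12, 24, 48

// Hypothetical helper: the same stop rules as the sample's ShouldRetry delegate.
static bool Decide(HttpStatusCode status, int attempt, int maxRetry) =>
    status != HttpStatusCode.Unauthorized && attempt < maxRetry;

// Hypothetical helper: the same rules as the sample's CalculateDelay.
static double ComputeDelay(HttpResponseMessage response, int attempt, int baseDelay, int maxDelay)
{
    double seconds = baseDelay;
    if (response.Headers.TryGetValues("Retry-After", out var values))
    {
        // Honor an integer Retry-After value (in seconds) when the service sends one
        if (int.TryParse(values.First(), out var retryAfter))
            seconds = retryAfter;
    }
    else
    {
        // Otherwise back off exponentially: baseDelay, 2x, 4x, ...
        seconds = Math.Pow(2, attempt) * baseDelay;
    }
    return Math.Min(seconds, maxDelay);
}

// Hypothetical helper: the delay before each retry, for responses without Retry-After.
static List<double> Schedule(int maxRetry, int baseDelay, int maxDelay)
{
    using var response = new HttpResponseMessage(HttpStatusCode.NotFound);
    var result = new List<double>();
    for (int attempt = 0; attempt < maxRetry; attempt++)
        result.Add(ComputeDelay(response, attempt, baseDelay, maxDelay));
    return result;
}

// Hypothetical helper: total calls made (original call plus retries) for a status code.
static int CountCalls(HttpStatusCode status, int maxRetry)
{
    int calls = 1; // the original call
    for (int attempt = 0; Decide(status, attempt, maxRetry); attempt++)
        calls++;   // ShouldRetry said yes, so one more call is made
    return calls;
}
```

Under these assumptions, MaxRetry = 5 gives six calls in total with waits of 3, 6, 12, 24 and 48 seconds between them, while an Unauthorized response stops after the original call. A throttled response carrying Retry-After: 10 would wait 10 seconds instead of the exponential value.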