This is not the first time I have written about MVC localization validation. The last time was back in 2012, in the blog post “Localization validation in MVC”. That post helped a lot of people with a question on StackOverflow.

In this blog post I create a website localized for English and Dutch. As an example I use a DateTime property, which is displayed, edited and validated in both cultures. A date is a good test case because the Dutch date format is dd-MM-yyyy, while the English (United States) format is MM/dd/yyyy.
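To make the difference concrete, here is a small JavaScript sketch. formatDate is a hypothetical helper written only for this illustration and supports just the dd, MM and yyyy tokens. It writes two different dates with the two patterns, and the same digits come out for both.

```javascript
// Hypothetical helper for this illustration: supports only the dd, MM and yyyy tokens.
function pad2(n) {
  return String(n).padStart(2, "0");
}

function formatDate(date, pattern) {
  return pattern
    .replace("dd", pad2(date.getDate()))
    .replace("MM", pad2(date.getMonth() + 1)) // JavaScript months are 0-based
    .replace("yyyy", String(date.getFullYear()));
}

const dutchPattern = "dd-MM-yyyy";
const englishPattern = "MM/dd/yyyy";

const thirdOfApril = new Date(2014, 3, 3);  // 3 April 2014
const fourthOfMarch = new Date(2014, 2, 4); // 4 March 2014

console.log(formatDate(thirdOfApril, dutchPattern));    // 03-04-2014
console.log(formatDate(fourthOfMarch, englishPattern)); // 03/04/2014
```

Read with the wrong pattern, 03-04-2014 silently turns into 4 March, and a Dutch date such as 31-12-2014 cannot be read at all because there is no month 31. That is why both display and validation have to follow the user's culture.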

Technology used

This new post uses the latest MVC technology available at the time of writing: Visual Studio 2013 Update 3 and ASP.NET MVC 5.2.2, running on .NET Framework 4.5.1.

Setup MVC localization validation

New project

Create a new ASP.NET Web Application with the MVC 5 template in Visual Studio, targeting .NET Framework 4.5.1. When the project has been created, update all MVC-related NuGet packages.

Web.config

Some people say that you have to alter the web.config to use localization. That is true when you build a website for one particular culture. In this tutorial, however, I want a website that supports multiple cultures from all over the world. You could build a website in English only, but many people prefer their own language. Here I will build it in English and Dutch (I’m from the Netherlands).

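So we don’t set any culture or UI culture in the web.config. Instead, the CultureHelper shown below determines the culture on every request from the browser’s language preferences (the Accept-Language header, which normally follows the language settings of the user’s pc or browser), falling back to the first supported culture.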

Culture selector

The user should be able to override the default culture of their browser or pc, because they may want to use a different culture for your website. So we create a list of the cultures your website supports, from which the user can pick one.

The following code creates that list and lets the user select a culture other than the browser culture. Add it to your “_Layout” page, for example in your menu or footer:

```cshtml
@{
    System.Globalization.CultureInfo currentCulture;
    var supportedCultures = Website.Helpers.CultureHelper.GetSwitchCultures(out currentCulture);
    string currentCultureDisplayName = currentCulture.Parent.NativeName;
}

<ul>
    @foreach (var culture in supportedCultures)
    {
        string url = Url.Action("SetPreferredCulture", "Culture", new { culture = culture.Name, returnUrl = Request.RawUrl });
        string urlName = culture.Parent.NativeName;

        if (culture.Name == currentCulture.Name)
        {
            <li class="active"><a href="@url">@urlName <i class="fa fa-check"></i></a></li>
        }
        else
        {
            <li><a href="@url">@urlName</a></li>
        }
    }
</ul>
```
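To see what the loop produces, the sketch below models the same menu in JavaScript. It is only an illustration: buildCultureMenu is hypothetical, the native names are hard-coded stand-ins for CultureInfo.Parent.NativeName, and the URL shape assumes the SetPreferredCulture route that is registered in RouteConfig.cs later in this post, with the return URL passed in the query string.

```javascript
// Stand-ins for CultureInfo.Parent.NativeName of the supported cultures.
const nativeNames = { en: "English", nl: "Nederlands" };

// Hypothetical model of the Razor loop: one link per supported culture,
// with the current culture marked as active.
function buildCultureMenu(supportedCultures, currentCulture, returnUrl) {
  return supportedCultures.map((name) => ({
    text: nativeNames[name.split("-")[0]] || name,
    url: "/SetPreferredCulture/" + encodeURIComponent(name) +
      "?returnUrl=" + encodeURIComponent(returnUrl),
    active: name === currentCulture,
  }));
}

const menu = buildCultureMenu(["en-US", "nl-NL"], "nl-NL", "/Members/Edit/1");
console.log(menu);
```

Every link points back to the page the user is on, so choosing a culture reloads the same page in the new language.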

Create a static class called CultureHelper (in the Website.Helpers namespace, which the layout code above refers to) and paste the following code:

```csharp
using System;
using System.Collections.Generic;
using System.Collections.Specialized;
using System.Diagnostics;
using System.Globalization;
using System.Linq;
using System.Threading;
using System.Web;

namespace Website.Helpers
{
    /// <summary>
    /// Sets the culture of the user for the rest of the application. The culture can be overridden by the user.
    /// If it is overridden, a cookie is set with the selected culture. When testing, delete the cookie to fall back to the browser language again.
    /// </summary>
    public static class CultureHelper
    {
        #region Constants

        /// <summary>
        /// The name of the cookie that stores the selected culture. By default there is no cookie and only the browser culture is used.
        /// The cookie is added when the user selects a specific culture.
        /// </summary>
        const string CookieName = "PreferredCulture";

        #endregion

        #region Fields

        /// <summary>
        /// The cultures supported by this application. When a new localization file is added to the application, add its CultureInfo here as well.
        /// </summary>
        public static readonly CultureInfo[] SupportedCultures = new CultureInfo[]
        {
            CultureInfo.GetCultureInfo("en-US"),
            CultureInfo.GetCultureInfo("nl-NL"),
            //CultureInfo.GetCultureInfo("de-DE"),
            //CultureInfo.GetCultureInfo("fr-FR"),
            //CultureInfo.GetCultureInfo("es-ES"),
        };

        #endregion

        #region Public Methods

        public static void ApplyUserCulture(this HttpRequest request)
        {
            ApplyUserCulture(request.Headers, request.Cookies);
        }

        public static CultureInfo GetMatch(CultureInfo[] acceptedCultures, CultureInfo[] supportedCultures, Func<CultureInfo, CultureInfo, bool> predicate)
        {
            // Accepted cultures are ordered by preference, so the first one with a match wins.
            foreach (var acceptedCulture in acceptedCultures)
            {
                var match = supportedCultures.FirstOrDefault(supportedCulture => predicate(acceptedCulture, supportedCulture));
                if (match != null)
                {
                    return match;
                }
            }
            return null;
        }

        public static CultureInfo GetMatchingCulture(CultureInfo[] acceptedCultures, CultureInfo[] supportedCultures)
        {
            return
                // first pass: exact matches as well as requested neutral matching supported region
                // supported: en-US, de-DE
                // requested: de, en-US;q=0.8
                // => de-DE! (de has precedence over en-US)
                GetMatch(acceptedCultures, supportedCultures, MatchesCompletely)
                // second pass: look for requested neutral matching supported _neutral_ region
                // supported: en-US, de-DE
                // requested: de-AT, en-GB;q=0.8
                // => de-DE! (no exact match, but de-AT has better fit than en-GB)
                ?? GetMatch(acceptedCultures, supportedCultures, MatchesPartly);
        }

        public static void GetSwitchCultures(out CultureInfo currentCulture, out CultureInfo nextCulture)
        {
            currentCulture = Thread.CurrentThread.CurrentUICulture;
            var currentIndex = Array.IndexOf(SupportedCultures.Select(ci => ci.Name).ToArray(), currentCulture.Name);
            int nextIndex = (currentIndex + 1) % SupportedCultures.Length;
            nextCulture = SupportedCultures[nextIndex];
        }

        public static CultureInfo[] GetSwitchCultures(out CultureInfo currentCulture)
        {
            currentCulture = Thread.CurrentThread.CurrentUICulture;
            return SupportedCultures;
        }

        public static CultureInfo GetUserCulture(NameValueCollection headers)
        {
            var acceptedCultures = GetUserCultures(headers["Accept-Language"]);
            return GetMatchingCulture(acceptedCultures, SupportedCultures);
        }

        public static CultureInfo[] GetUserCultures(string acceptLanguage)
        {
            // Accept-Language: fr-FR , en;q=0.8 , en-us;q=0.5 , de;q=0.3
            if (string.IsNullOrWhiteSpace(acceptLanguage))
                return new CultureInfo[] { };

            return acceptLanguage
                .Split(',')
                .Select(s => WeightedLanguage.Parse(s))
                .OrderByDescending(w => w.Weight)   // stable: equal weights keep the header order
                .Select(w => GetCultureInfo(w.Language))
                .Where(ci => ci != null)             // skip unknown names such as "*"
                .ToArray();
        }

        public static void SetPreferredCulture(this HttpResponseBase response, string cultureName)
        {
            SetPreferredCulture(response.Cookies, cultureName);
        }

        #endregion

        #region Private Methods

        private static void ApplyUserCulture(NameValueCollection headers, HttpCookieCollection cookies)
        {
            // Precedence: culture chosen by the user (cookie), then the browser languages, then the first supported culture.
            var culture = GetPreferredCulture(cookies)
                ?? GetUserCulture(headers)
                ?? SupportedCultures[0];

            var t = Thread.CurrentThread;
            t.CurrentCulture = culture;     // formatting and parsing of dates and numbers
            t.CurrentUICulture = culture;   // selection of the resource (.resx) files
            Debug.WriteLine("Culture: " + culture.Name);
        }

        private static CultureInfo GetCultureInfo(string language)
        {
            try
            {
                return CultureInfo.GetCultureInfo(language);
            }
            catch (CultureNotFoundException)
            {
                return null;
            }
        }

        private static CultureInfo GetPreferredCulture(HttpCookieCollection cookies)
        {
            var cookie = cookies[CookieName];
            if (cookie == null)
                return null;

            var culture = GetCultureInfo(cookie.Value);
            if (culture == null)
                return null;

            // Ignore cookie values for cultures this website does not support.
            if (!SupportedCultures.Any(ci => ci.Name == culture.Name))
                return null;

            return culture;
        }

        private static bool MatchesCompletely(CultureInfo acceptedCulture, CultureInfo supportedCulture)
        {
            if (supportedCulture.Name == acceptedCulture.Name)
            {
                return true;
            }

            // acceptedCulture could be neutral and supportedCulture specific, but this is still a match (de matches de-DE, de-AT, …)
            if (acceptedCulture.IsNeutralCulture && supportedCulture.Parent.Name == acceptedCulture.Name)
            {
                return true;
            }

            return false;
        }

        private static bool MatchesPartly(CultureInfo acceptedCulture, CultureInfo supportedCulture)
        {
            // Compare only the neutral (language) cultures, e.g. de-AT and de-DE both become de.
            supportedCulture = supportedCulture.Parent;
            if (!acceptedCulture.IsNeutralCulture)
            {
                acceptedCulture = acceptedCulture.Parent;
            }

            return supportedCulture.Name == acceptedCulture.Name;
        }

        private static void SetPreferredCulture(HttpCookieCollection cookies, string cultureName)
        {
            var cookie = new HttpCookie(CookieName, cultureName)
            {
                Expires = DateTime.Now.AddDays(30)
            };
            cookies.Set(cookie);
            Debug.WriteLine("SetPreferredCulture: " + cultureName);
        }

        #endregion
    }

    [DebuggerDisplay("Language = {Language} Weight = {Weight}")]
    internal class WeightedLanguage
    {
        public string Language { get; set; }
        public double Weight { get; set; }

        public static WeightedLanguage Parse(string weightedLanguageString)
        {
            // de
            // en;q=0.8
            var parts = weightedLanguageString.Split(';');
            var result = new WeightedLanguage { Language = parts[0].Trim(), Weight = 1.0 };
            if (parts.Length > 1)
            {
                parts[1] = parts[1].Replace("q=", "").Trim();
                double d;
                if (double.TryParse(parts[1], NumberStyles.AllowDecimalPoint, CultureInfo.InvariantCulture, out d))
                    result.Weight = d;
            }
            return result;
        }
    }
}
```

The “SupportedCultures” field contains the list of supported cultures for your website.
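The two-pass matching in GetMatchingCulture is easiest to follow with concrete headers. The following JavaScript sketch mirrors the idea so it can be run on its own. It is a simplification, not a port: culture names are plain strings, the parent of a culture such as de-AT is taken to be its language part (de), and unknown names are not filtered out the way CultureInfo.GetCultureInfo does. All function names are hypothetical.

```javascript
// Parse an Accept-Language header into language tags, most preferred first.
function parseAcceptLanguage(header) {
  if (!header || !header.trim()) return [];
  return header
    .split(",")
    .map((entry, index) => {
      const [language, ...params] = entry.split(";").map((s) => s.trim());
      let weight = 1.0; // no q parameter means q=1
      for (const param of params) {
        const q = param.startsWith("q=") ? param.slice(2) : "";
        if (/^\d*\.?\d+$/.test(q)) weight = Number(q);
      }
      return { language, weight, index };
    })
    .filter((w) => w.language !== "" && w.language !== "*")
    .sort((a, b) => b.weight - a.weight || a.index - b.index) // ties keep header order
    .map((w) => w.language);
}

const lower = (name) => name.toLowerCase();
const languageOf = (name) => lower(name).split("-")[0]; // "de-AT" -> "de"
const isNeutral = (name) => !name.includes("-");        // "de" has no region

// First pass: exact match, or a neutral request ("de") matching a supported region ("de-DE").
function matchesCompletely(accepted, supported) {
  return lower(accepted) === lower(supported) ||
    (isNeutral(accepted) && lower(accepted) === languageOf(supported));
}

// Second pass: only the language parts have to agree ("de-AT" vs "de-DE").
function matchesPartly(accepted, supported) {
  return languageOf(accepted) === languageOf(supported);
}

function getMatch(acceptedCultures, supportedCultures, predicate) {
  for (const accepted of acceptedCultures) {
    const match = supportedCultures.find((candidate) => predicate(accepted, candidate));
    if (match) return match;
  }
  return null;
}

function getMatchingCulture(acceptedCultures, supportedCultures) {
  return getMatch(acceptedCultures, supportedCultures, matchesCompletely) ||
    getMatch(acceptedCultures, supportedCultures, matchesPartly);
}

const supportedExample = ["en-US", "de-DE"];
console.log(getMatchingCulture(parseAcceptLanguage("de, en-US;q=0.8"), supportedExample));    // de-DE
console.log(getMatchingCulture(parseAcceptLanguage("de-AT, en-GB;q=0.8"), supportedExample)); // de-DE
console.log(getMatchingCulture(parseAcceptLanguage("fr-FR, es;q=0.5"), ["en-US", "nl-NL"]));  // null
```

In the real helper, a null result like the last one is what makes ApplyUserCulture fall back to SupportedCultures[0].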

We must also apply the client’s culture in the application, and this has to happen on every request. So open your Global.asax and add the following event handler:

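```csharp
// In Global.asax.cs; requires "using Website.Helpers;" at the top of the file.

/// <summary>
/// Fires at the start of each request.
/// </summary>
protected void Application_OnBeginRequest()
{
    // Apply the client's culture (cookie, browser language or default) to the current thread
    CultureHelper.ApplyUserCulture(Request);
}
```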
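ApplyUserCulture picks the culture in a fixed order of precedence. The JavaScript sketch below restates that order as one small function so it is easy to reason about. The function and parameter names are hypothetical, and headerMatch stands for the result of the Accept-Language matching step (or null when nothing matched).

```javascript
// Hypothetical restatement of the precedence in CultureHelper.ApplyUserCulture.
function resolveRequestCulture(cookieValue, headerMatch, supportedCultures) {
  // 1. A culture the user picked earlier, but only if the site still supports it.
  const fromCookie = cookieValue
    ? supportedCultures.find((c) => c.toLowerCase() === cookieValue.toLowerCase())
    : undefined;
  // 2. The best match for the browser's Accept-Language header.
  // 3. The first supported culture as the final fallback.
  return fromCookie || headerMatch || supportedCultures[0];
}

const cultures = ["en-US", "nl-NL"];
console.log(resolveRequestCulture("nl-NL", "en-US", cultures)); // nl-NL (cookie wins)
console.log(resolveRequestCulture("fr-FR", "nl-NL", cultures)); // nl-NL (unsupported cookie ignored)
console.log(resolveRequestCulture(undefined, null, cultures));  // en-US (fallback)
```

Because the resolved culture is assigned to both CurrentCulture and CurrentUICulture, it drives date formatting and parsing as well as the choice of resource file.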

Create a new CultureController in your project and paste the following code:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Web.Mvc;
using Website.Helpers;

namespace Website.Controllers
{
    public class CultureController : Controller
    {
        // GET: /SetPreferredCulture/de-DE
        [AllowAnonymous]
        public ActionResult SetPreferredCulture(string culture, string returnUrl)
        {
            Response.SetPreferredCulture(culture);

            // Only redirect to local URLs, so this action cannot be abused to send users to another site.
            if (string.IsNullOrEmpty(returnUrl) || !Url.IsLocalUrl(returnUrl))
                return RedirectToAction("Index", "Home");

            return Redirect(returnUrl);
        }
    }
}
```
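The returnUrl parameter comes straight from the query string, so without a check anyone could craft a link that sets the culture and then redirects to another site. ASP.NET MVC offers Url.IsLocalUrl for this. The JavaScript sketch below roughly mirrors the rule it applies, as far as I know it (a path starting with a single / or with ~/ counts as local), so you can see which values pass. isLocalUrl and redirectTarget here are illustrations, not the framework's code.

```javascript
// Rough illustration of a "local URL" check similar to Url.IsLocalUrl in ASP.NET MVC.
function isLocalUrl(url) {
  if (!url) return false;
  if (url[0] === "/") {
    // "/" alone or "/path" is local; "//host" and "/\host" are protocol-relative tricks.
    return url.length === 1 || (url[1] !== "/" && url[1] !== "\\");
  }
  // "~/path" is an application-relative path.
  return url.length > 1 && url[0] === "~" && url[1] === "/";
}

// Hypothetical redirect decision: fall back to the site root for anything non-local.
function redirectTarget(returnUrl) {
  return isLocalUrl(returnUrl) ? returnUrl : "/";
}

console.log(redirectTarget("/Members/Edit/1"));      // /Members/Edit/1
console.log(redirectTarget("https://example.com/")); // /
console.log(redirectTarget("//example.com"));        // /
```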

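Add a new route above the default route in your RouteConfig.cs. Routes are matched in order, so otherwise the default route would handle /SetPreferredCulture/nl-NL first.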

```csharp
// Set culture route
routes.MapRoute(
    name: "SetPreferredCulture",
    url: "SetPreferredCulture/{culture}",
    defaults: new { controller = "Culture", action = "SetPreferredCulture", culture = UrlParameter.Optional }
);
```

Create a folder called “Localizations” in the root of your project and add a resource file called “Labels.resx” to it. This is the English resource file, so English is the default language. Set its Access Modifier to Public. Then copy the Labels resource file for each other language; for Dutch this is Labels.nl-NL.resx. When the user switches culture, or the browser requests a different culture, the matching resource file is chosen automatically.
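Which file wins is decided by .NET's resource fallback: the ResourceManager looks for resources of the specific culture (nl-NL), then of its parent neutral culture (nl), and finally uses the default resources from Labels.resx. The JavaScript sketch below imitates that walk over file names to show the consequence. resourceFallbackChain and pickResourceFile are hypothetical, and at run time the lookup actually goes through compiled satellite assemblies rather than .resx files.

```javascript
// Hypothetical sketch: the fallback order for a culture name, most specific first.
function resourceFallbackChain(cultureName) {
  const chain = [];
  let name = cultureName || "";
  while (name) {
    chain.push(name);
    const dash = name.lastIndexOf("-");
    name = dash > 0 ? name.slice(0, dash) : ""; // "nl-NL" -> "nl" -> ""
  }
  chain.push(""); // "" stands for the default resources (Labels.resx)
  return chain;
}

// Pick the first existing file along the chain.
function pickResourceFile(baseName, cultureName, availableFiles) {
  for (const culture of resourceFallbackChain(cultureName)) {
    const file = culture ? baseName + "." + culture + ".resx" : baseName + ".resx";
    if (availableFiles.includes(file)) return file;
  }
  return null;
}

const files = ["Labels.resx", "Labels.nl-NL.resx"];
console.log(pickResourceFile("Labels", "nl-NL", files)); // Labels.nl-NL.resx
console.log(pickResourceFile("Labels", "nl-BE", files)); // Labels.resx
```

So if you ever add nl-BE to SupportedCultures, a file named Labels.nl.resx would serve both nl-NL and nl-BE, while Labels.nl-NL.resx only serves nl-NL.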

DateTime Example

Now that we have the right culture for the user, we need an example to prove that it actually works. So we create a class with a DateTime property, which we can display and edit in the different cultures. Remember that Dutch uses a different date format than English.

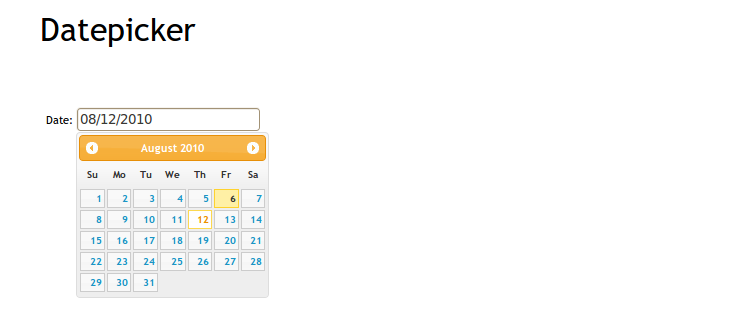

Because browsers display HTML 5 date textboxes differently, and older browsers don’t support them at all, we create our own date textbox with the jQuery UI datepicker, at least until the HTML 5 specification is finally done.

To do this, we create a new editor template. If you have never done this before, don’t worry: it is very easy. Create a folder called “EditorTemplates” in the “Views/Shared” folder, and in it create a new view called “Date” (Date.cshtml).

From now on, whenever a property with the Date data type is rendered with EditorFor, this editor template is used automatically. Paste the following code into the Date editor template:

```cshtml
@model DateTime?

@if (Model.HasValue)
{
    @Html.TextBox("", Model.Value.ToShortDateString(), new { @class = "date form-control" })
}
else
{
    @Html.TextBox("", Model, new { @class = "date form-control" })
}
```

Initialize the Datepicker

Now that we have an editor template, we need to initialize the jQuery UI datepicker. Because the datepicker should match the user’s culture, the right datepicker language file must be loaded as well. You can find the language files through the jQuery UI datepicker documentation page or in the jQuery UI source code. Save the one you need in your Scripts folder, for example as datepicker-nl.js, which is the file name the code below looks for.

Add the following code to the _Layout page, just after the script references for jQuery and jQuery UI:

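```cshtml
@if (!string.IsNullOrWhiteSpace(UICulture))
{
    string shortCulture = UICulture.Substring(0, 2);
    if (shortCulture != "en")
    {
        // Only add a language script when the culture isn't English, because English is the datepicker default.
        // Url.Content resolves "~" so the path also works when the site runs in a virtual directory.
        string url = Url.Content(string.Format("~/Scripts/datepicker-{0}.js", shortCulture));
        <script src="@url"></script>
    }
}
```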

This code finds the right language file for the current (selected) culture and adds it to the page.
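The Razor snippet uses only the first two letters of the UI culture. jQuery UI also ships a few region-specific datepicker files, such as datepicker-en-GB.js and datepicker-pt-BR.js, which that rule never picks. If you need those, a lookup that tries the full culture name first could look like the JavaScript sketch below. datepickerScriptUrl is hypothetical, and availableFiles is an assumption standing in for the files you actually copied to your Scripts folder.

```javascript
// Hypothetical lookup: prefer a region-specific datepicker file, then the language-only file.
// availableFiles stands in for the datepicker-*.js files in your Scripts folder.
function datepickerScriptUrl(uiCulture, availableFiles) {
  if (!uiCulture || !uiCulture.trim()) return null;
  const candidates = [uiCulture, uiCulture.substring(0, 2)]; // e.g. "pt-BR", then "pt"
  for (const name of candidates) {
    // English (United States) is the datepicker's built-in default, so no file is needed.
    if (name === "en" || name === "en-US") return null;
    const file = "datepicker-" + name + ".js";
    if (availableFiles.includes(file)) return "/Scripts/" + file;
  }
  return null;
}

const files = ["datepicker-nl.js", "datepicker-en-GB.js", "datepicker-pt-BR.js"];
console.log(datepickerScriptUrl("nl-NL", files)); // /Scripts/datepicker-nl.js
console.log(datepickerScriptUrl("en-GB", files)); // /Scripts/datepicker-en-GB.js
console.log(datepickerScriptUrl("en-US", files)); // null
```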

```html
<script type="text/javascript">
    $(document).ready(function () {
        $('.date').datepicker({
            showOtherMonths: true,
            selectOtherMonths: true,
            changeMonth: true,
            changeYear: true,
            numberOfMonths: 1,
            showButtonPanel: true
        });
    });
</script>
```

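This code turns every textbox rendered by the Date editor template (the ones with the date class) into a datepicker. The culture-specific settings, such as the date format and the names of months and days, come from the language file loaded by the previous snippet, so no culture-specific options are needed here.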
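The datepicker takes care of input, but not necessarily of client-side validation. If a Dutch date such as 31-12-2014 is rejected, a likely cause is the built-in date method of jQuery Validate, which in the versions I have looked at checks the text with the browser's own new Date(value) parsing, and browsers do not understand dd-mm-yy. A stricter check that follows the datepicker's own format could look like the sketch below. parseDatepickerDate and isValidDate are hypothetical helpers; they only support formats built from dd, mm and yy (a four-digit year in jQuery UI notation) with a single separator.

```javascript
// Accepts jQuery UI formats such as "dd-mm-yy" or "mm/dd/yy": three tokens, one separator.
const SUPPORTED_FORMAT = /^(dd|mm|yy)([-\/. ])(dd|mm|yy)\2(dd|mm|yy)$/;

// Hypothetical helper: returns a Date, or null if the text is not a real date in that format.
function parseDatepickerDate(text, dateFormat) {
  const format = SUPPORTED_FORMAT.exec(dateFormat);
  if (!format) return null; // unsupported format string
  const order = [format[1], format[3], format[4]];
  const parts = String(text).trim().split(format[2]);
  if (parts.length !== 3) return null;

  const value = {};
  for (let i = 0; i < 3; i++) {
    // In jQuery UI notation "yy" is a four-digit year; dd and mm allow one or two digits.
    const shape = order[i] === "yy" ? /^\d{4}$/ : /^\d{1,2}$/;
    if (!shape.test(parts[i])) return null;
    value[order[i]] = Number(parts[i]);
  }

  const date = new Date(value.yy, value.mm - 1, value.dd);
  // new Date() rolls invalid values over (31-02 becomes early March), so compare back.
  const sameDate = date.getFullYear() === value.yy &&
    date.getMonth() === value.mm - 1 &&
    date.getDate() === value.dd;
  return sameDate ? date : null;
}

function isValidDate(text, dateFormat) {
  return parseDatepickerDate(text, dateFormat) !== null;
}

console.log(isValidDate("31-12-2014", "dd-mm-yy")); // true  (Dutch format)
console.log(isValidDate("12/31/2014", "mm/dd/yy")); // true  (datepicker default)
console.log(isValidDate("31-12-2014", "mm/dd/yy")); // false (wrong separator)
console.log(isValidDate("12-31-2014", "dd-mm-yy")); // false (no month 31)
console.log(isValidDate("31-02-2014", "dd-mm-yy")); // false (no 31 February)
```

To use it in the page, you could replace the built-in rule after jquery.validate.js has loaded, for example `$.validator.methods.date = function (value, element) { return this.optional(element) || isValidDate(value, $(element).datepicker("option", "dateFormat")); };`. jQuery UI's own `$.datepicker.parseDate(format, value)`, which throws when it cannot parse the value, is an alternative to the hand-written parser.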

Test the editor

To test the editor, create a class with a DateTime property, such as the following “Member” class:

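```csharp
using System;
using System.ComponentModel.DataAnnotations;
using Website.Localizations; // assumed namespace of the public Labels class generated from Localizations/Labels.resx

public class Member
{
    [Key]
    public int Id { get; set; }

    // Display names are read from Labels.resx / Labels.nl-NL.resx for the current UI culture.
    // This assumes those files contain the keys FirstName, LastName and BirthDay
    // (in Labels.nl-NL.resx: Voornaam, Achternaam and Geboortedatum).
    [Display(Name = "FirstName", ResourceType = typeof(Labels))]
    [Required]
    [StringLength(50)]
    public string FirstName { get; set; }

    [Display(Name = "LastName", ResourceType = typeof(Labels))]
    [Required]
    [StringLength(50)]
    public string LastName { get; set; }

    [Display(Name = "BirthDay", ResourceType = typeof(Labels))]
    [Required]
    [DataType(DataType.Date)] // selects the Date editor template
    public DateTime BirthDay { get; set; }
}
```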

The editor template is selected because the property has the “DataType” attribute with the value “DataType.Date”. You could also use the “UIHint” attribute, for example [UIHint("Date")].

If you generate (scaffold) a new Edit or Create view, change the code for the BirthDay property to:

```cshtml
<div class="form-group">
    @Html.LabelFor(model => model.BirthDay, new { @class = "control-label col-md-2" })
    <div class="col-md-10">
        @Html.EditorFor(model => model.BirthDay, new { htmlAttributes = new { @class = "form-control" } })
        @Html.ValidationMessageFor(model => model.BirthDay)
    </div>
</div>
```

Now run your project and try to create or edit a member. You should see a textbox with a jQuery UI datepicker attached to it. When you select a date from the datepicker, it should be formatted according to the culture you selected, and validation should follow that culture as well.

Conclusion

Now you have a website that does not depend on the server’s culture, with a jQuery UI datepicker in the culture the user wants. You can deploy it to any server without setting a culture there, because the culture is determined on every request. This is convenient for me in the Netherlands: I can build a Dutch website and deploy it to Microsoft Azure (where the servers use the en-US culture) without any problems.